The stubborn persistence of online-offline inconsistency

The most preventable bugs are surely the most infuriating

If you build ML models for production use cases, such as real-time ranking, recommendations, content extraction, or spam filtering, then it’s quite likely that your implementation for real-time serving to users (“online”) differs in some substantial ways from the setting in which you built your models (“offline” or “development”). This is in contrast to modeling situations that don’t depend on recency, where we can run models in batch and use their static predictions until the next run of the model.

We build models, and then we apply them to the world. Sometimes the results of the first stage aren’t matched in the second. This has many names, including “training-serving skew” and “online-offline discrepancy”.

I want to zoom in on one specific subclass of that wide bug class: features that are inadvertently computed differently in the two settings. That’s what I’m calling “online-offline feature mismatch”. These bugs are among the most trivially easy to verify, yet we keep creating them over and over again. Unsurprisingly, given my well-documented fascination with bug patterns, I have some thoughts on this one.

A recent story from DoorDash

I am motivated by a recent blog post from DoorDash [1]. I love posts like that, which investigate bugs and provide real details about the messy world of production machine learning. It goes beyond rhetoric, with metrics (tables of them!) and Matplotlib graphs. Lots of detail to sink my teeth into.

Their setting is restaurant ads on DoorDash. They predict multiple (non-exclusive) labels. Although they don’t tell us what those labels are, we could imagine them including outcomes like whether users click on the ad. That’s an important business problem for DoorDash, and modeling these ads has been a fruitful investment, with at least five successful iterations across 2023 and 2024. That includes the model iteration described in the post, which added over 40 new consumer engagement features to the model.

Adding 40 features sounds great, but it turns out that most of them had implementation issues. And not just one issue: most were subject to two separate implementation mistakes.

Feature staleness: Their offline features were constructed by taking the prior day’s value for each. But their online features were 2, 3, or 4 days out of date.
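To make the staleness bug concrete, here is a minimal simulation, assuming a hypothetical daily feature history keyed by date. The offline path reads the value from one day before each request, while the online path reads one from three days before (an illustrative pick within the reported 2 to 4 days). The function names are made up for this sketch; it is not DoorDash’s code.

```python
from __future__ import annotations

from datetime import date, timedelta


def lagged_value(history: dict[date, float], as_of: date, lag_days: int) -> float | None:
    """Return the feature value computed `lag_days` before `as_of`, or None if absent."""
    return history.get(as_of - timedelta(days=lag_days))


def staleness_mismatch_rate(
    history: dict[date, float],
    request_days: list[date],
    offline_lag: int = 1,
    online_lag: int = 3,
) -> float:
    """Fraction of requests where the offline (1-day lag) and online (stale) values differ."""
    mismatches = sum(
        1
        for day in request_days
        if lagged_value(history, day, offline_lag) != lagged_value(history, day, online_lag)
    )
    return mismatches / len(request_days)


# Hypothetical features: one changes every day, the other never changes.
start = date(2024, 1, 1)
changing = {start + timedelta(days=d): float(d) for d in range(10)}
constant = {start + timedelta(days=d): 5.0 for d in range(10)}
requests = [start + timedelta(days=d) for d in range(4, 9)]

print(staleness_mismatch_rate(changing, requests))  # 1.0
print(staleness_mismatch_rate(constant, requests))  # 0.0
```

How badly a stale feature diverges depends on how fast it moves: slow-moving features can look fine under this check while fast-moving ones are wrong on nearly every request.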

Improper persistence of values: Their online feature store doesn’t overwrite existing values if the new value is supposed to be null. Over time this means that features accumulate non-null values, even if they should typically be null.1
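A toy model of the persistence bug, assuming the online store is a plain key-value map (like the Redis-style cache speculated about below). Both store classes and `replay` are hypothetical: one skips null writes, the other lets a null overwrite the old value, and we replay a few days of batch writes for a single user.

```python
from __future__ import annotations

from typing import Any


class SkipNullStore:
    """Hypothetical online store that silently ignores null writes (the described bug)."""

    def __init__(self) -> None:
        self._data: dict[str, Any] = {}

    def write(self, key: str, value: Any) -> None:
        if value is None:
            return  # Bug: the previous non-null value survives.
        self._data[key] = value

    def read(self, key: str) -> Any:
        return self._data.get(key)


class OverwritingStore(SkipNullStore):
    """Same store, but a null write replaces the old value, matching the offline source."""

    def write(self, key: str, value: Any) -> None:
        self._data[key] = value


def replay(store: SkipNullStore, daily_values: list[Any], key: str = "user_1") -> list[Any]:
    """Write each day's batch value, then record what the online model would read."""
    reads = []
    for value in daily_values:
        store.write(key, value)
        reads.append(store.read(key))
    return reads


# The offline source says the feature became null on days 2 and 3.
daily = [3.0, None, None]
print(replay(SkipNullStore(), daily))     # [3.0, 3.0, 3.0]
print(replay(OverwritingStore(), daily))  # [3.0, None, None]
```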

I find these bugs fascinating. The first one fascinates me because it is the opposite direction of what I usually see: I’m used to features that update in near real time in production but where modelers approximate them offline with a one day lag.2 The second one is just such an obvious case of a “feature store” that was hacked together out of something with a different purpose than hosting features (maybe that something is Redis with the purpose of caching).

The situation isn’t any one person’s fault. Those bugs are downstream of unexpected technical choices in infrastructure. Likely those choices were appropriate consequences of DoorDash’s scale and growth, but they resulted in ML infrastructure that does not match all the expectations and needs of ML practitioners.

All of their top 10 new features (of the 40+) were subject to both problems. Those 10 had between 4.38% and 35.7% wrong values due to staleness,3 and between 1.15% and 45.6% improperly persisted non-nulls.4
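Percentages like these come from comparing paired offline and online values for the same requests. Here is a minimal sketch of such a comparison, assuming the offline value is the intended truth and the online value comes from, say, serving logs. It reports the improper non-null rate with two denominators (all rows, and only rows that should be null), since the choice changes the number a lot. The function names are hypothetical, and this is not how the DoorDash authors computed their figures.

```python
from __future__ import annotations

import math
from typing import Any, Sequence


def is_null(value: Any) -> bool:
    """Treat both None and float NaN as null."""
    return value is None or (isinstance(value, float) and math.isnan(value))


def same(a: Any, b: Any) -> bool:
    """Two values match if both are null, or neither is null and they are equal."""
    if is_null(a) or is_null(b):
        return is_null(a) and is_null(b)
    return a == b


def mismatch_report(offline: Sequence[Any], online: Sequence[Any]) -> dict[str, float]:
    """Compare paired offline and online values of one feature for the same requests."""
    if len(offline) != len(online) or not offline:
        raise ValueError("need equal-length, non-empty sequences")
    n = len(offline)
    wrong = sum(1 for a, b in zip(offline, online) if not same(a, b))
    null_idx = [i for i, a in enumerate(offline) if is_null(a)]
    improper = sum(1 for i in null_idx if not is_null(online[i]))
    return {
        "wrong_frac": wrong / n,
        "improper_non_null_frac_of_all": improper / n,
        "improper_non_null_frac_of_nulls": improper / len(null_idx) if null_idx else 0.0,
    }


offline = [1.0, 2.0, None, None]
online = [1.0, 5.0, 7.0, float("nan")]
print(mismatch_report(offline, online))
# {'wrong_frac': 0.5, 'improper_non_null_frac_of_all': 0.25, 'improper_non_null_frac_of_nulls': 0.5}
```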

The features were wrong. Very wrong.

Some sympathy

I don’t highlight DoorDash’s story because of its rarity, but rather its ubiquity.5 Everybody has an online-offline discrepancy sooner or later. When Shankar et al. (2022) interviewed practitioners on their ML deployment practices, the topic cited by the most practitioners was “data drift/shift/skew” [2].6 The very first issue they highlight under “Pain points in Production ML” is “Mismatch Between Development and Production Environments”.

Despite the problem being widely known and having some reasonable solutions, we still can’t get rid of it.

Very recently, one team at my work had an incident of online-offline feature mismatch, albeit a milder one. Although they tried to share code between the online and offline implementations, at the slim boundary between the data sources and the shared code there was a problem with translating and encoding null values. That issue delayed a launch.
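One common guard at that boundary is to normalize every null encoding to a single value before the shared feature code runs. Here is a minimal sketch, assuming a hypothetical set of sentinels and a hypothetical feature with a `-1.0` default; each real source will have its own conventions, so the sentinel set is the part to audit.

```python
from __future__ import annotations

import math
from typing import Any

# Hypothetical null sentinels; each real source has its own conventions.
# For example, "\N" is the default null marker in Hive text files.
NULL_SENTINELS = {"", "null", "NULL", "None", "\\N"}


def normalize_null(value: Any) -> Any:
    """Map every null encoding seen at the source boundary to a single Python None."""
    if value is None:
        return None
    if isinstance(value, float) and math.isnan(value):
        return None
    if isinstance(value, str) and value.strip() in NULL_SENTINELS:
        return None
    return value


def days_since_last_order(raw: Any) -> float:
    """Shared feature logic; -1.0 is a hypothetical default for 'no previous order'."""
    value = normalize_null(raw)
    return -1.0 if value is None else float(value)


# An offline warehouse export and an online service encode "missing" differently.
print(days_since_last_order("\\N"))  # offline: -1.0
print(days_since_last_order(None))   # online:  -1.0
print(days_since_last_order("0"))    # a real zero stays 0.0
```

Without the normalization step, the offline path would crash on `float("")` or `float("\\N")` (both raise `ValueError`), or, worse, someone would patch it with a different default than the online path uses.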

Why is this so hard?

We want to ensure that offline features exactly match their online computations. Basic transcription errors can create differences between online and offline implementations, which might be updated manually or written in different programming languages. So everyone tries to unify online and offline calculations as much as possible, defining the calculation code so that it is structurally identical in both settings. Sometimes that’s done with one piece of code used in both places.
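Here is a minimal sketch of “one piece of code used in both places”, assuming a hypothetical order-event record and feature. A batch backfill and a request handler both call the same `avg_order_amount`. The strict `ts < as_of` bound keeps a backfill from seeing events at or after the moment being predicted.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class OrderEvent:
    user_id: str
    ts: float  # seconds since epoch
    amount: float


def avg_order_amount(events: list[OrderEvent], as_of: float, window_s: float) -> float | None:
    """Single feature definition: mean order amount in [as_of - window_s, as_of)."""
    vals = [e.amount for e in events if as_of - window_s <= e.ts < as_of]
    return sum(vals) / len(vals) if vals else None


def offline_backfill(
    events: list[OrderEvent], observations: list[tuple[str, float]], window_s: float
) -> list[float | None]:
    """Offline path: apply the shared function at each (user_id, timestamp) observation."""
    return [
        avg_order_amount([e for e in events if e.user_id == uid], ts, window_s)
        for uid, ts in observations
    ]


def online_serve(user_events: list[OrderEvent], now: float, window_s: float) -> float | None:
    """Online path: apply the same function to one user's events at request time."""
    return avg_order_amount(user_events, now, window_s)


events = [OrderEvent("u1", 10.0, 10.0), OrderEvent("u1", 20.0, 30.0), OrderEvent("u2", 15.0, 100.0)]
print(offline_backfill(events, [("u1", 25.0), ("u2", 16.0)], window_s=100.0))  # [20.0, 100.0]
print(online_serve([e for e in events if e.user_id == "u1"], now=25.0, window_s=100.0))  # 20.0
```

Shared code removes transcription errors, but both paths still have to feed it identically shaped events, which is exactly the boundary problem discussed below.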

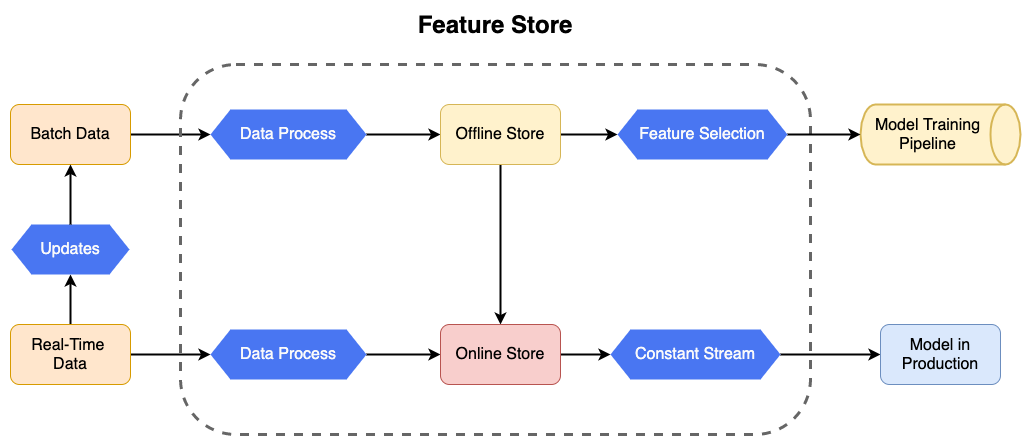

In the image above, from Airbnb’s feature store Chronon [3], features are defined with a single source of truth through a domain-specific language (DSL), so that each has only one definition.

Using the same code or the same specification is still subject to boundaries, like in the example I gave from work where null representation differed in the two feature sources. Feature stores that want to eliminate that issue, like Chronon, have to do a lot of work under the hood to understand the data sources and their properties.

A simpler way to eliminate variation is to calculate the features offline and then copy (“materialize”) them online for use in the online model. This ensures both that the core calculations are the same and the data sources are the same.
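A minimal sketch of materialization, assuming a hypothetical feature and a dict standing in for the online store. The offline job computes every value, and `materialize` copies all of them, nulls included, tagged with the snapshot they came from, so the online side can also tell how old its values are.

```python
from __future__ import annotations


def compute_offline(daily_orders: dict[str, list[int]]) -> dict[str, float | None]:
    """Offline batch job: mean daily orders per user (hypothetical feature)."""
    return {
        user: (sum(counts) / len(counts) if counts else None)
        for user, counts in daily_orders.items()
    }


def materialize(
    offline_values: dict[str, float | None],
    online_store: dict[str, tuple[float | None, str]],
    snapshot_date: str,
) -> None:
    """Copy every offline value, including nulls, into the online store with its snapshot."""
    for user, value in offline_values.items():
        online_store[user] = (value, snapshot_date)


store = {"u2": (5.0, "2024-01-01")}  # leftover value from an earlier run
materialize(compute_offline({"u1": [1, 3], "u2": []}), store, "2024-01-02")
print(store)  # {'u2': (None, '2024-01-02'), 'u1': (2.0, '2024-01-02')}
```

Writing nulls unconditionally is the design choice that matters here: a store that skipped them would reproduce the persistence bug described earlier in this post.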

However, this approach has limits. First, it doesn’t work for features that cannot be precomputed because the user activity they depend on happens immediately before the model is called, such as incorporating search queries into a search engine’s ranking models. In the diagram above, note how some real-time data has a path to the online store that bypasses the offline store.

Second, this design wouldn’t (and didn’t?) solve either of DoorDash’s problems: a persistence bug in feature updates meant that the online feature store didn’t hold the values they intended it to, and online feature availability had an unexpected lag.

Furthermore, even when we only need precomputable features and have correctly unified online and offline feature calculations, it is hard to truly replicate the nuances of the differing environments. Consider this excellent blog post from Pinterest, where they had an issue due to timeouts from the online feature service. Would you solve that by constructing artificial timeouts in your offline feature store?
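A simplified simulation of why timeouts create mismatch, assuming (hypothetically) that the online service substitutes a fixed fallback when a fetch exceeds its latency budget. The latencies, budget, and zero fallback are made up; this is not Pinterest’s system. The offline backfill never times out, so it always sees the true value.

```python
from __future__ import annotations

DEFAULT_ON_TIMEOUT = 0.0  # hypothetical fallback the online service substitutes


def fetch_with_budget(true_value: float, latency_ms: float, budget_ms: float) -> float:
    """Simulated online fetch: return the fallback if the call exceeds its latency budget."""
    return true_value if latency_ms <= budget_ms else DEFAULT_ON_TIMEOUT


def compare_paths(
    values: list[float], latencies_ms: list[float], budget_ms: float
) -> tuple[list[float], list[float], float]:
    """Return (online values, offline values, timeout rate) for the same requests."""
    online = [fetch_with_budget(v, lat, budget_ms) for v, lat in zip(values, latencies_ms)]
    offline = list(values)  # an offline backfill never times out
    timeout_rate = sum(lat > budget_ms for lat in latencies_ms) / len(latencies_ms)
    return online, offline, timeout_rate


online, offline, rate = compare_paths([5.0, 7.0, 9.0, 11.0], [10, 80, 20, 120], budget_ms=50)
print(online)   # [5.0, 0.0, 9.0, 0.0]
print(offline)  # [5.0, 7.0, 9.0, 11.0]
print(rate)     # 0.5
```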

A simple solution…

We are not restricted to some combination of a) having consistent calculation between two environments, or b) trying to dependably migrate features from offline to online. We also have the option of c) using logs of online features as the source for our future offline modeling efforts. This works both for features materialized from offline and for features calculated entirely online. Regardless of their source, we can log them all, ideally as close as possible to where they are provided to the online ML model. Then when modeling offline we simply fetch those features from the logs for each observation. The logs form a natural corpus. There is a bit of nuance about correctly logging unique identifiers and joining with labels, but if we get it right once then it’s solved forever.
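A minimal sketch of option c), assuming hypothetical function names and an in-memory list standing in for a real log sink. At serving time we write one JSON line per request with a unique request id and the exact feature vector the model saw. Offline, we join labels to those lines by request id. This sketch assumes labels arrive explicitly (including negatives) for every resolved request, so a missing label means “not yet known” and the row is dropped.

```python
from __future__ import annotations

import json
from typing import Any


def log_served_features(log: list[str], request_id: str, ts: float, features: dict[str, Any]) -> None:
    """Append the exact feature vector the model saw, keyed by a unique request id."""
    log.append(json.dumps({"request_id": request_id, "ts": ts, "features": features}))


def build_training_rows(log: list[str], labels: dict[str, int]) -> list[tuple[dict[str, Any], int]]:
    """Join logged features to outcomes by request id; requests without a label are skipped."""
    rows = []
    for line in log:
        rec = json.loads(line)
        label = labels.get(rec["request_id"])
        if label is not None:
            rows.append((rec["features"], label))
    return rows


log: list[str] = []
log_served_features(log, "r1", 1700000000.0, {"f": 1.5, "g": None})
log_served_features(log, "r2", 1700000001.0, {"f": 2.0, "g": 3})
print(build_training_rows(log, {"r1": 1}))  # [({'f': 1.5, 'g': None}, 1)]
```

Because the logged values are whatever the online model actually received, stale or timed-out values show up in training exactly as they did in serving.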

This would have solved DoorDash’s mismatch, because offline modeling would have used the same stale, lagged features that the online model actually received. DoorDash would still have the problem that multi-day lag makes features less useful, but at least they wouldn’t have a mismatch that led them to falsely believe they had stronger models.

This would also partially help with Pinterest’s problem. The timeouts would be stochastic and likely not predictable from other features, but at least they would be represented in the logged feature distributions, which could also draw attention to the problem.

A simple solution…that nobody likes

Why doesn’t everybody do this, for all models? The issue is how to handle new features. That’s often the point of new models in the first place, such as in DoorDash’s case where they added 40 new features. Those new features won’t be represented in logs from the incumbent model.

Does that send us back to the drawing board? Not always: we can add the new features online and calculate them while still using the old model, and then wait while a corpus accumulates. Once we have enough data, then we can build the new model.
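A minimal sketch of computing new features online while the old model still serves, assuming hypothetical names throughout. Every registered feature is computed and logged, but only the incumbent’s features are passed to the incumbent model, so the candidate features accumulate a corpus without affecting predictions.

```python
from __future__ import annotations

from typing import Any, Callable


def serve(
    request: dict[str, Any],
    incumbent_model: Callable[[dict[str, Any]], float],
    incumbent_names: list[str],
    feature_fns: dict[str, Callable[[dict[str, Any]], Any]],
    log: list[dict[str, Any]],
) -> float:
    """Compute every registered feature, score with the incumbent's subset, log them all."""
    features = {name: fn(request) for name, fn in feature_fns.items()}
    score = incumbent_model({name: features[name] for name in incumbent_names})
    log.append({"request_id": request["request_id"], "features": features, "score": score})
    return score


feature_fns = {
    "old_feature": lambda r: r["x"],
    "new_feature": lambda r: r["x"] * 2,  # candidate feature, not yet used by any model
}
log: list[dict[str, Any]] = []
score = serve({"request_id": "r1", "x": 3}, lambda f: f["old_feature"] + 1.0, ["old_feature"], feature_fns, log)
print(score)               # 4.0
print(log[0]["features"])  # {'old_feature': 3, 'new_feature': 6}
```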

In some settings, where it takes months or years to accumulate suitable sample, this isn’t a realistic option. But in high volume settings or where recency might be an important attribute of your modeling, waiting a week or two might be fine. Both of those properties sound like they might hold for DoorDash, where they have a plethora of searches, with immediate outcomes for labels, and where new food trends might affect user behavior.
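The arithmetic behind “waiting a week or two might be fine” is simple enough to sketch. Assuming steady traffic, a hypothetical target sample size, and labels that arrive a fixed delay after each request (near zero for immediate outcomes like clicks), the wait is roughly:

```python
import math


def days_to_accumulate(
    target_examples: int,
    daily_requests: int,
    logged_fraction: float = 1.0,
    label_delay_days: int = 0,
) -> int:
    """Days of logging before a new feature has `target_examples` labeled rows.

    Assumes steady traffic and that labels arrive `label_delay_days` after a request.
    """
    per_day = daily_requests * logged_fraction
    return math.ceil(target_examples / per_day) + label_delay_days


# Hypothetical numbers: 5M training rows, 1M requests/day.
print(days_to_accumulate(5_000_000, 1_000_000))                       # 5
print(days_to_accumulate(5_000_000, 1_000_000, logged_fraction=0.5))  # 10
print(days_to_accumulate(5_000_000, 1_000_000, label_delay_days=7))   # 12
```

High volume and fast labels shrink the wait to days; low volume or slow labels stretch it to the months or years where this approach stops being realistic.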

So what did the team at DoorDash think of this option? They wave it away, since “there’s a clear trade-off between development speed and data accuracy, which needs careful consideration during the project planning stage” [1].

Well! I’m not so sure about the trade-off here. Does their development speed account for the time they spent building models from mismatched features? And investigating and rebuilding the models? What about the performance costs of years of smaller amounts of mismatch before that?

They might want to build a better case for that conclusion.

Even the cautionary paper by Shankar et al. calls out the “tension” here: “development cycles are more experimental and move faster than production cycles” [2]. They do go on to acknowledge, though, that early validation doesn’t succeed if the environments are sufficiently different. As I sometimes like to say: activity does not imply productivity. A team being busy does not necessarily mean that it is maximizing its effectiveness.

Waiting out sample accumulation for new features does not have to be unproductive. If you create regular cycles of feature ideation and model building, you might always have some new features that have freshly accumulated enough sample.

For a long time, one of my teams at work operated with this approach to features. That didn’t mean we sat on our hands while waiting for sample. Instead we often split projects into sub-projects: feature ideation and implementation came first, and model building, using those features and any others that had been developed, came later. In between, we worked on other relevant projects, so everyone stayed active. Sometimes, while we accumulated data for a new set of features, we simultaneously worked on a model using the last batch of features we had added.

This approach worked well, with productive model building without reversals from online-offline feature mismatch.

Alas, it was not to last. Not everyone liked it. People wanted to build models from their own new features, not from the recently-maturing features from others; likewise, they didn’t want to design new features and then have someone else build the models that used them. They didn’t like that decoupling, and they didn’t like waiting. As engineers, we find it feels wrong to have any part of a process contingent on waiting for human time to pass linearly.

Modelers started to ask the reasonable question: why can’t we engineer our way out of waiting? It can’t be that hard to create the features the same online as offline, right? Mismatch is a known problem now, surely we won’t fall into that obvious trap?

After debating the pros and cons enough times, we eventually decided that changing would be better and worth the implementation effort. We created a simple offline SQL-based feature store with reliable (timestamp-based) backfills and reliable online materialization. Many of our most complicated features could be defined in that feature store, which made it easier for modelers to develop new features in the comfort of SQL. So we switched regimes and stopped relying on online feature logs, except for features that depend on real-time data from the session.
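Our store was SQL-based, but the core of a timestamp-based backfill is easy to show in a few lines of Python. This is a sketch of the idea, not our implementation: for each training observation, take the latest feature value strictly before the observation time, so an exact-timestamp match cannot leak the future. The `availability_lag` parameter is an assumption for illustration; it models values that only become usable online some time after they are computed, as in DoorDash’s multi-day lag.

```python
from __future__ import annotations

import bisect
from typing import Any, Sequence


def point_in_time_lookup(
    feature_ts: Sequence[float],
    feature_vals: Sequence[Any],
    obs_ts: float,
    availability_lag: float = 0.0,
    allow_exact: bool = False,
) -> Any:
    """Latest feature value that would have been available at `obs_ts`.

    `feature_ts` must be sorted ascending. A value computed at time t is treated as
    usable only from t + availability_lag. By default a value stamped exactly at the
    cutoff is excluded, since it may include data from the observation itself.
    """
    cutoff = obs_ts - availability_lag
    if allow_exact:
        idx = bisect.bisect_right(feature_ts, cutoff) - 1
    else:
        idx = bisect.bisect_left(feature_ts, cutoff) - 1
    return feature_vals[idx] if idx >= 0 else None


def backfill(
    feature_ts: Sequence[float],
    feature_vals: Sequence[Any],
    observation_ts: Sequence[float],
    availability_lag: float = 0.0,
) -> list[Any]:
    """Timestamp-based backfill for a batch of training observations."""
    return [point_in_time_lookup(feature_ts, feature_vals, t, availability_lag) for t in observation_ts]


ts, vals = [10.0, 20.0, 30.0], ["a", "b", "c"]
print(backfill(ts, vals, [5.0, 20.0, 35.0]))                         # [None, 'a', 'c']
print(backfill(ts, vals, [5.0, 20.0, 35.0], availability_lag=10.0))  # [None, None, 'b']
```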

On one hand, we can test new features earlier and avoid decoupling a modeling project into two parts. On the other hand, we have had a few mismatch bugs since then.7 It turns out we’re not infallible after all.

This change probably nets out as being worth it, not least because it put the discussion to rest.8

Wrapping up

By their very nature, real-time ML systems differ substantially from the non-real-time development environments we use to build those models. We can struggle with something as simple as constructing features exactly the same way in both. Textbook solutions abound (and I haven’t covered them all here), but they all have drawbacks, and they are truly tested by high-scale systems managed by teams that rapidly ship new models.

If nothing else, the prevalence of such a simple bug class should increase our humility. Many other bug classes and unexpected model behaviors are more complex, harder to notice, and not always verifiable. When a challenger model beats or loses to an incumbent, it might not be for the reasons we expect.

[1] Song, H., Miao, X., & Kumar, U. (2024). How to investigate the online vs offline performance for DNN models. https://careersatdoordash.com/blog/how-to-investigate-the-online-vs-offline-performance-for-dnn-models/.

[2] Shankar, S., Garcia, R., Hellerstein, J. M., & Parameswaran, A. G. (2022). Operationalizing Machine Learning: An Interview Study. arXiv:2209.09125.

[3] Simha, N. (2023). Chronon — A Declarative Feature Engineering Framework. https://medium.com/airbnb-engineering/chronon-a-declarative-feature-engineering-framework-b7b8ce796e04.

[4] Leite, G. (2021). mlops-guide: MLOps end-to-end guide and tutorial website, using IBM Watson, DVC, CML, Terraform, GitHub Actions and more. https://mlops-guide.github.io.

1. They call this “cached residuals”; the less said about that name, the better.

2. Matching on the exact day can allow improperly future-looking features.

3. There are several caveats on how this is measured (the authors say to take it “with a grain of salt”), and some ambiguity in how to interpret Table 4.

4. I’m not sure about the overlap between these metrics, or whether the former’s denominator is all values or only non-null values.

5. Well, it is rare in how extreme their problem was. So, a bit of both?

6. See Table 3.

7. That we know of. I wouldn’t rule out unknown mismatch bugs, despite our best efforts at monitoring. Note that DoorDash only identified their problem because of dramatic AUC differences between their online and offline analyses.

8. If I sound conflicted on this, it’s because I am. I’m the simplicity guy. Simple is good. Broken is bad. I strongly believe in the benefits of removing the distractions caused by bugs and the incorrect analysis caused by unreliable data. I don’t think people internalize the costs they impose on future modelers with broken data, or on the company through an inefficient path to launch or through learning invalid lessons. But a recurring debate is also a distraction, and there are switching costs from splitting projects into two phases or handing off between people.